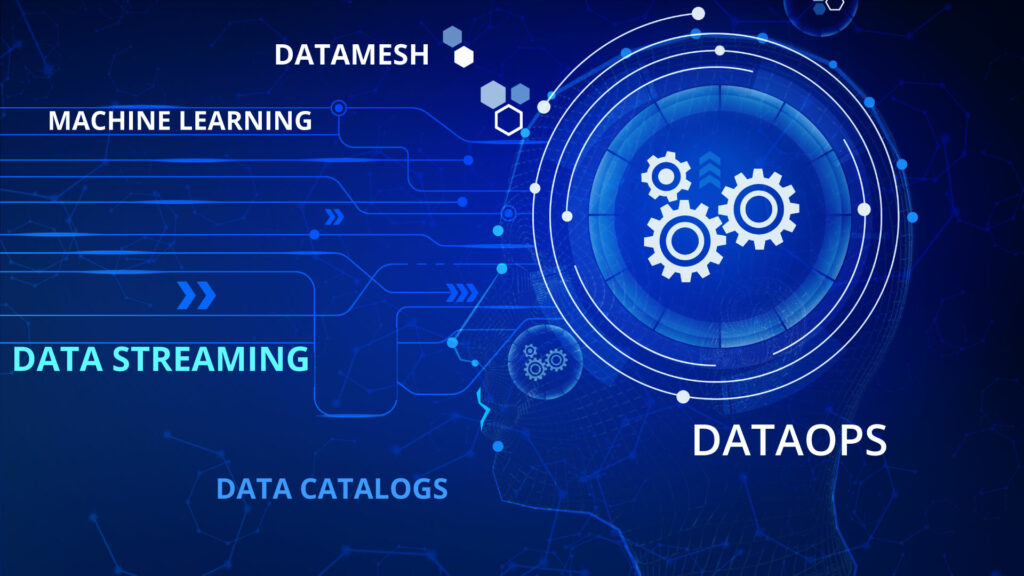

Top 5 Data Engineering Techniques in 2023

Data engineering plays a pivotal role in unlocking the true value of data. From collecting and organising vast amounts of information to building robust data pipelines, it is a complex and vital capability that is becoming more prevalent in today’s technology landscape. Data engineering has many intricacies, from its challenges and techniques to the crucial role it plays in enabling data-driven decision making. In this blog post, we explore the top 5 trending data engineering techniques that are expected to make a significant impact in 2023.

TL Consulting sees data engineering as an essential discipline that plays a critical role in maximising the value of key data assets. In recent years, several trends and technologies have emerged, shaping the field of data engineering and offering new opportunities for businesses to harness the power of their data. These techniques enable better and more efficient management of data, unlock valuable insights, and help enable innovation in a more targeted manner. Because data engineering is a rapidly evolving domain, there is a continuous need to introduce new techniques and technologies to handle the increasing volume, variety, and velocity of data.

Data Engineering Techniques

DataOps

One such trend is DataOps, an approach that focuses on streamlining and automating data engineering processes by drawing on agile software engineering and DevOps. By implementing DataOps principles, organisations can achieve collaboration, agility, and continuous integration and delivery in their data operations. This approach enables faster data processing and analysis by automating data pipelines, version controlling data artefacts, and ensuring the reproducibility of data processes, in line with DevOps and CI/CD practices.
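As a rough illustration of what an automated, reproducible pipeline step might look like, the Python sketch below extracts a small CSV, cleans it, runs data quality checks, and computes a fingerprint of the output that a CI job could compare between runs. Everything here is an assumption made for illustration: the sample data, the column names, and the function names (`extract`, `transform`, `validate`, `fingerprint`, `run_pipeline`) are hypothetical and not taken from any particular DataOps tool.

```python
import csv
import hashlib
import io
import json

# Hypothetical sample input; a real pipeline would read from a source system.
RAW_ORDERS = """order_id,customer,amount
1001,acme,250.00
1002,globex,99.50
1003,acme,
"""


def extract(raw_csv: str) -> list[dict]:
    """Parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows: list[dict]) -> list[dict]:
    """Drop rows with no amount, normalise types, and sort for a stable order."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append(
            {
                "order_id": int(row["order_id"]),
                "customer": row["customer"].strip().lower(),
                "amount": round(float(row["amount"]), 2),
            }
        )
    return sorted(cleaned, key=lambda r: r["order_id"])


def validate(rows: list[dict]) -> list[str]:
    """Return a list of data quality problems (empty when the data is clean)."""
    errors = []
    if not rows:
        errors.append("no rows")
    ids = [r["order_id"] for r in rows]
    if len(ids) != len(set(ids)):
        errors.append("duplicate order_id")
    if any(r["amount"] < 0 for r in rows):
        errors.append("negative amount")
    return errors


def fingerprint(rows: list[dict]) -> str:
    """Hash a canonical JSON form of the output so runs can be compared."""
    payload = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def run_pipeline(raw_csv: str) -> tuple[list[dict], str]:
    """Run extract, transform, and validate; fail loudly on bad data."""
    rows = transform(extract(raw_csv))
    errors = validate(rows)
    if errors:
        raise ValueError("data quality checks failed: " + ", ".join(errors))
    return rows, fingerprint(rows)


if __name__ == "__main__":
    rows, digest = run_pipeline(RAW_ORDERS)
    print(f"{len(rows)} rows passed validation, fingerprint {digest[:12]}")
```

Running the pipeline twice on the same input should produce the same fingerprint, which is the kind of check a CI/CD job could assert before a pipeline change is promoted.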
DataOps improves quality, reduces time-to-insights, and enhances collaboration across data teams while promoting a culture of continuous improvement.

Data Mesh

Another significant trend is Data Mesh, which addresses the challenges of scaling data engineering in large enterprises. Data Mesh emphasises domain-oriented ownership of data and treats data as a product. By adopting Data Mesh, organisations can establish cross-functional data teams, where each team is responsible for a specific domain and the associated data products. This approach promotes “self-service” data access through a data platform capability, empowering domain experts to manage and govern their data. Furthermore, as the data mesh gains adoption and evolves, each team that shares its data as products enables data-driven innovation. Data Mesh enables scalability, agility, and improved data quality by distributing data engineering responsibilities across the organisation.

Data Streaming

Real-time data processing has also gained prominence with the advent of data streaming technologies. Data streaming allows organisations to process and analyse data as it arrives, enabling immediate insights and the ability to respond quickly to dynamic business conditions. Platforms like Apache Kafka, Apache Flink, Azure Stream Analytics and Amazon Kinesis provide scalable and fault-tolerant streaming capabilities. Data engineers leverage these technologies to build real-time data pipelines, facilitating real-time analytics, event-driven applications, and monitoring systems. This capability can lead to optimised real-time stream processing and yield valuable insights into customer behaviours and trends. These insights can help you make timely and informed decisions to drive your business growth.

Machine Learning

The intersection of data engineering and machine learning engineering has become increasingly important.
Machine learning engineering focuses on the deployment and operationalisation of machine learning models at scale. Data engineers collaborate with data scientists to develop scalable pipelines that automate the training, evaluation, and deployment of machine learning models. Technologies like TensorFlow Extended (TFX), Kubeflow, and MLflow are utilised to operationalise and manage machine learning workflows effectively. Real-time data streaming offers numerous benefits and empowers you to make informed business decisions.

Data Catalogs

Lastly, in our experience, data catalogs and metadata management solutions have become crucial for managing and discovering data assets. As data volumes grow, organising and governing data effectively becomes challenging. Data cataloguing enables users to search and discover relevant datasets and helps create a single source of knowledge for understanding business data. Metadata management solutions facilitate data lineage tracking, data quality monitoring, and data governance, ensuring data assets are well-managed and trusted. Data cataloguing accelerates analysis by minimising the time and effort that analysts spend finding and preparing data.

These trends and technology advancements are reshaping the data engineering landscape, providing organisations with opportunities to optimise their data assets, accelerate insights, and make data-driven decisions with confidence. Embracing these developments, and understanding your data assets and their associated value, can lead to smarter, better-informed business decisions.

By embracing these trending techniques, organisations can transform their data engineering capabilities to enable some of the following benefits:

- Accelerated data-driven decision-making.
- Enhanced customer insights, transparency and understanding of customer behaviours.
- Improved agility and responsiveness to market trends.
- Increased operational efficiency and cost savings.
- Mitigated risks through robust data governance and security measures.

Data engineering is vital for optimising organisational data assets, since these are an important cornerstone of any business. It ensures data quality, integration, and accessibility, enabling effective data analysis and decision-making. By transforming raw data into valuable insights, data engineering empowers organisations to maximise the value of their data assets and gain a competitive edge in the digital landscape.

TL Consulting specialises in data engineering techniques and solutions that drive transformative value for businesses, enabling the above benefits. We leverage our expertise to design and implement robust data pipelines, optimise data storage and processing, and enable advanced analytics. Partner with us to unlock the full potential of your data and make data-driven decisions with confidence. Visit TL Consulting’s data services page to learn more about our service capabilities, and send us an enquiry if you’d like to find out how our dedicated consultants can help you.