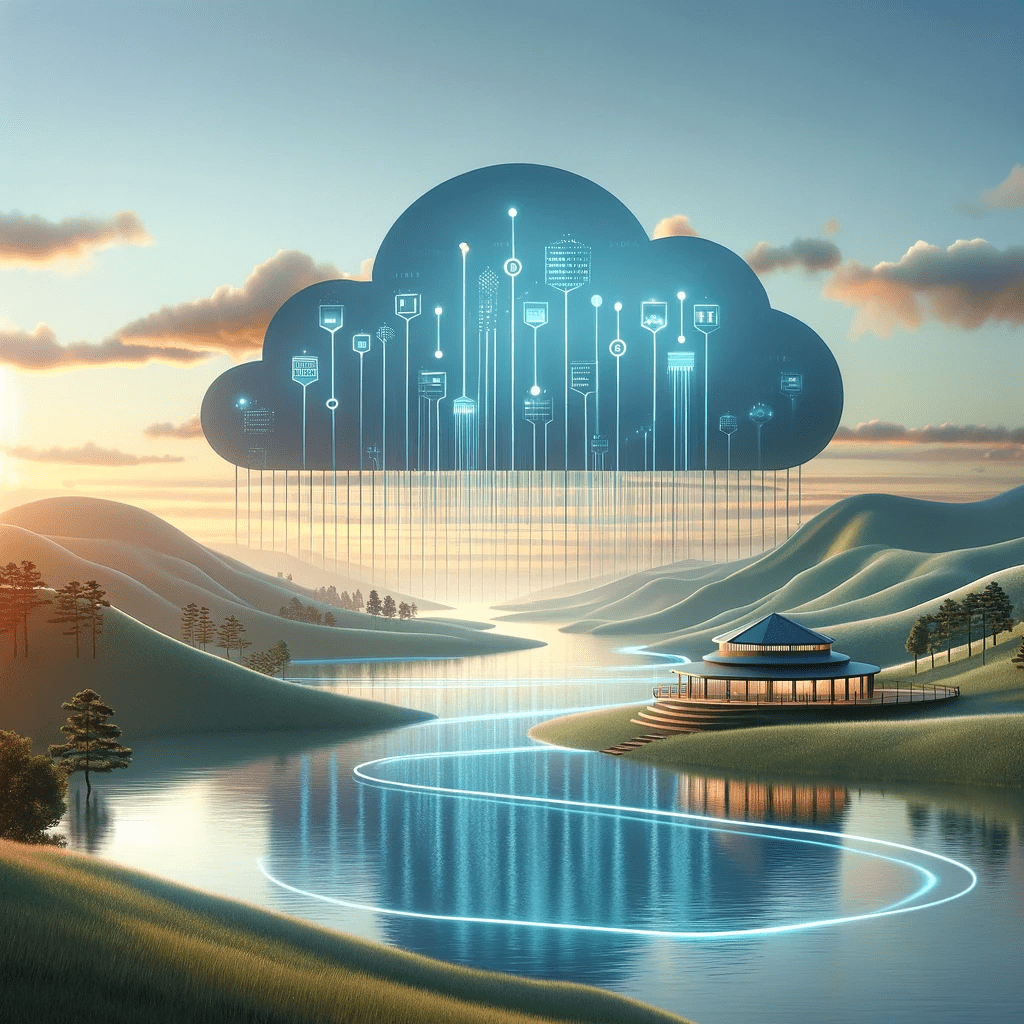

In the ever-evolving landscape of data management, traditional centralised approaches often fall short of addressing the challenges posed by the increasing scale and complexity of modern data ecosystems. Enter Data Mesh, a paradigm shifts in data architecture that reimagines data as a product and decentralises data ownership and architecture. In this technical blog, we aim to start decoding Data Mesh, exploring its key concepts, principles, and market insights. What is Data Mesh? At its core, the Data Mesh is a sociotechnical approach to building a decentralised data architecture. Think of it as a web of interconnected data products owned and served by individual business domains. Each domain team owns its data, from ingestion and transformation to consumption and analysis. This ownership empowers them to manage their data with agility and cater to their specific needs. Key Principles of Data Mesh: The following diagram illustrates an example modern data ecosystem hosted on Microsoft Azure that various business domains can operationalise, govern and own independently to serve their own data analytics use cases. Challenges and Opportunities: Despite these challenges, the opportunities outweigh the hurdles. The Data Mesh offers unparalleled benefits, including: Benefits of Adopting a Data Mesh: Future Trends and Considerations: The Data Mesh is more than just a trendy architectural concept; it’s rapidly evolving into a mainstream approach for managing data in the digital enterprise. To truly understand its significance, let’s delve into some key market insights: Growing Market Value: Conclusion: In conclusion, Data Mesh represents a paradigm shift in how organisations approach data architecture and management. By treating data as a product and decentralising ownership, Data Mesh addresses the challenges of scale, complexity, and agility in modern data ecosystems. Implementing Data Mesh requires a strategic approach, embracing cultural change, and leveraging the right set of technologies to enable decentralised, domain-oriented data management. As organisations continue to grapple with the complexities of managing vast amounts of data, Data Mesh emerges as a promising framework to navigate this new frontier.